Project Overview

I like AI and enjoy experimenting with it. While playing around with Stable Diffusion, I thought to myself: "Hey, if I could run the prompts and settings dynamically, this would be a great way to build an AI filter app."

Well, it turns out Stable Diffusion can run in API mode! So I quickly designed the app and started implementing it, using React for the frontend and FastAPI for the backend.
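A minimal sketch of how a Python backend could call Stable Diffusion in API mode. It assumes the API is the one exposed by the AUTOMATIC1111 web UI when started with `--api`, whose `/sdapi/v1/img2img` endpoint accepts base64-encoded `init_images` and returns base64-encoded `images`; the URL and helper names are illustrative, not taken from the app.

```python
import base64
import json
import urllib.request

# Assumption: a local AUTOMATIC1111 web UI launched with --api; placeholder URL.
SD_URL = "http://127.0.0.1:7860/sdapi/v1/img2img"


def build_img2img_payload(image_bytes: bytes, prompt: str, negative_prompt: str,
                          denoising_strength: float) -> dict:
    """Build the JSON body for an img2img call; the input image is sent base64-encoded."""
    return {
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "denoising_strength": denoising_strength,
    }


def run_img2img(payload: dict, url: str = SD_URL) -> bytes:
    """POST the payload and return the first generated image as raw bytes."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return base64.b64decode(body["images"][0])
```

Keeping payload construction separate from the HTTP call makes it easy to test each filter's settings without a GPU running.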

This project was driven more by curiosity than by a goal of bringing it to completion.

I got the core functionality working: the filters work! The user can:

- Upload an image (or take a new one with the phone camera).

- Select a filter.

- Send the image for processing.

- Receive the image with the filter applied.

- Save the image.

About the Filters

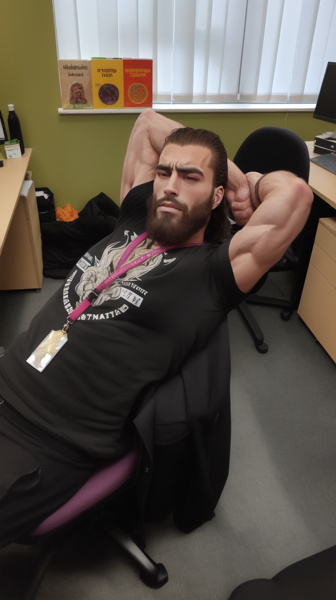

Currently, the app has only one filter, which makes the user look more "manly".

Even so, the system for supporting more filters is already in place and working, including per-filter settings (for example, the strength of the "manlification").

Adding more filters is trivial: each filter is a simple Python file that defines the right settings, prompts, and ControlNets.
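A minimal sketch of what one filter definition could look like. `FilterDefinition`, `build_request`, the `FILTERS` registry, and every prompt and setting value are hypothetical placeholders, not the app's real files; the request dict is an internal structure rather than any specific API's schema, and mapping "strength" to img2img denoising strength is an assumption.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class FilterDefinition:
    """Hypothetical shape of one filter: prompts, base settings, and ControlNet units."""
    name: str
    prompt: str
    negative_prompt: str
    base_settings: dict[str, Any] = field(default_factory=dict)
    controlnets: list[dict[str, Any]] = field(default_factory=list)

    def build_request(self, strength: float) -> dict[str, Any]:
        """Merge the user-facing strength setting into a copy of the base settings."""
        if not 0.0 <= strength <= 1.0:
            raise ValueError("strength must be between 0 and 1")
        request = dict(self.base_settings)  # copy so the filter itself is never mutated
        request.update(
            prompt=self.prompt,
            negative_prompt=self.negative_prompt,
            # Assumption: "strength" maps to img2img denoising strength.
            denoising_strength=strength,
            controlnets=[dict(cn) for cn in self.controlnets],
        )
        return request


# Hypothetical "manly" filter; all values are placeholders.
MANLY = FilterDefinition(
    name="manly",
    prompt="portrait photo of a rugged man, beard, strong jawline",
    negative_prompt="blurry, deformed",
    base_settings={"steps": 20, "cfg_scale": 7.0},
    controlnets=[{"module": "openpose", "weight": 1.0}],
)

# Registry: in a one-file-per-filter layout, each file would contribute one entry.
FILTERS = {f.name: f for f in (MANLY,)}
```

With this shape, the backend only needs the filter name and the user's strength value to produce a complete request.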

The Queue System

Processing an image takes time (about 4 seconds), so the user doesn't receive the result right away, and other users may be trying to generate images at the same time. To handle this, I included a queue system. When the user submits an image, a WebSocket connection is opened, and the user is placed in either a standard or a priority queue, depending on their account type. Over the WebSocket, the user is kept updated on how many users are ahead of them in the queue, until it's their turn and the finished image is sent back to them.
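A minimal sketch of the two-tier ordering described above, assuming strict priority (priority jobs always go first, FIFO within each tier). `TwoTierQueue` and its methods are hypothetical names; the WebSocket layer is omitted, and in the app a worker would push each user's position over their connection.

```python
from collections import deque
from typing import Optional


class TwoTierQueue:
    """Priority jobs are always served before standard ones; FIFO within each tier."""

    def __init__(self) -> None:
        self.priority: deque[str] = deque()
        self.standard: deque[str] = deque()

    def enqueue(self, job_id: str, is_priority: bool) -> None:
        (self.priority if is_priority else self.standard).append(job_id)

    def positions(self) -> dict[str, int]:
        """Number of jobs ahead of each waiting job (0 means it is next)."""
        ordered = list(self.priority) + list(self.standard)
        return {job_id: i for i, job_id in enumerate(ordered)}

    def position(self, job_id: str) -> int:
        return self.positions()[job_id]  # raises KeyError if the job is not waiting

    def pop_next(self) -> Optional[str]:
        """Take the next job to process, or None if both queues are empty."""
        if self.priority:
            return self.priority.popleft()
        if self.standard:
            return self.standard.popleft()
        return None
```

After each `pop_next()`, a worker could broadcast `positions()` so every waiting client sees its updated place. Strict priority can starve standard users under constant priority traffic; a weighted scheme would avoid that at the cost of slightly slower priority service.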

Important Considerations

Safety

Running AI filters on local instances can be tricky, especially with publicly available models. Because of this, I had to safety-test the filter, and I would need to do the same for any filter I add in the future.

Given the audience for this kind of app, I had to make sure that the model and the trained LoRA used by the filter would never generate anything not safe for work, a risk that is often present with public models even for the most innocent prompts.

Luckily, it never did during testing. The base model I chose isn't meant for that kind of image in the first place, which makes it fairly safe to use. Even so, as an extra safeguard, I added many keywords to the negative prompt to further reduce the risk.
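One way to apply the extra negative-prompt keywords consistently is to merge a shared safety list into every filter's negative prompt. This is a minimal sketch under that assumption; `SAFETY_NEGATIVES` and `with_safety_negatives` are hypothetical, and the keyword list is a placeholder since the actual keywords aren't given here.

```python
# Hypothetical shared keyword list; the app's real keywords are not listed in this write-up.
SAFETY_NEGATIVES = ("nsfw", "nudity", "explicit")


def with_safety_negatives(filter_negative: str,
                          extra: tuple[str, ...] = SAFETY_NEGATIVES) -> str:
    """Append shared safety keywords to a comma-separated negative prompt, skipping duplicates."""
    terms = [t.strip() for t in filter_negative.split(",") if t.strip()]
    seen = {t.lower() for t in terms}  # case-insensitive duplicate check
    for term in extra:
        if term.lower() not in seen:
            terms.append(term)
            seen.add(term.lower())
    return ", ".join(terms)
```

Centralizing the list means a new filter picks up the safeguards automatically instead of relying on each filter file to repeat them.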

Business

While I never intended to turn this app into a commercial product, I always design with the possibility that it could become one in the future.

The main business consideration for this project was operating cost, chiefly the cost of GPU time. While I can process an image on my personal computer in under 4 seconds, relying on that for production would not be ideal. Furthermore, to offer a priority queue to paid users, I would need to run two queues at the same time, which I can't do locally with a single GPU.

To solve this for production, I would need a service that provides GPU time, such as Hugging Face. These services offer multiple plans with different GPU tiers, and they allow usage to scale with demand, which could be very useful if the app ever becomes popular.

Lessons Learned

There is one design choice I regret: the tech stack. With the current tech stack, I can't access the user's files. This means I can't automatically save the final images to the user's device, and I can't retrieve them for a “gallery” feature. To work around this, I would need online storage such as Firebase, which could become costly as the number of stored images grows.

If I were to attempt this project again, I would use a tech stack designed for mobile applications. Dioxus in particular caught my eye: it's a Rust framework for building web, desktop, and mobile applications. It works very similarly to React, and, most importantly, the same codebase can be deployed to web, desktop, and mobile, so a mobile build would give me access to the user's storage for the gallery feature.

Output Examples